Revenue: $130.00M (2024)

Valuation: $1.25B (2024)

Growth Rate (y/y): 400% (2024)

Funding: $228.50M (2024)

Revenue

Sacra estimates that Together AI hit $130M in annualized recurring revenue (ARR) in 2024, up 400% year-over-year, driven by growing demand for generative AI applications and by the need, particularly among startups, for developer tooling to train, fine-tune, and deploy AI models.

Valuation

Together AI reached a $1.25 billion valuation through funding led by Salesforce Ventures.

Based on 2024 revenue of $130M and a $1.25B valuation, the company is valued at a 9.6x revenue multiple.
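The multiple above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it uses the figures quoted in this report, the function names are hypothetical, and it assumes that "up 400%" means 2024 ARR was five times the prior year's figure. If the growth figure instead means 4x, the implied prior-year ARR would be $32.5M.

```python
def revenue_multiple(valuation_usd: float, revenue_usd: float) -> float:
    """Valuation divided by revenue (hypothetical helper)."""
    return valuation_usd / revenue_usd


def implied_prior_revenue(current_usd: float, growth_pct: float) -> float:
    """Prior-period revenue implied by a percentage increase.

    Assumes "up N%" means current = prior * (1 + N / 100).
    """
    return current_usd / (1 + growth_pct / 100)


ARR_2024 = 130e6    # Sacra estimate quoted in the report
VALUATION = 1.25e9  # valuation quoted in the report
GROWTH_PCT = 400    # year-over-year growth quoted in the report

multiple = revenue_multiple(VALUATION, ARR_2024)
prior_arr = implied_prior_revenue(ARR_2024, GROWTH_PCT)

print(f"Revenue multiple: {multiple:.1f}x")
print(f"Implied 2023 ARR: ${prior_arr / 1e6:.0f}M")
```

Run as written, this gives a 9.6x multiple and, under the stated reading of the growth rate, an implied 2023 ARR of about $26M.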

Together AI has raised $228.5M in total funding, with notable backing from leading technology investors. Key strategic investors include Salesforce Ventures, Nvidia Corp., and Kleiner Perkins, along with participation from Coatue Management and Lux Capital.

Product

Together AI was founded in 2021 by Percy Liang, Chris Ré, and Vipul Ved Prakash with the mission of making AI development more accessible and affordable by leveraging open-source models.

Companies use cloud GPU hosts such as Together AI, CoreWeave, and Lambda Labs to access Nvidia (NASDAQ: NVDA) graphics processing units (GPUs), which they use to train AI models on their datasets, fine-tune them, and deploy them into production.

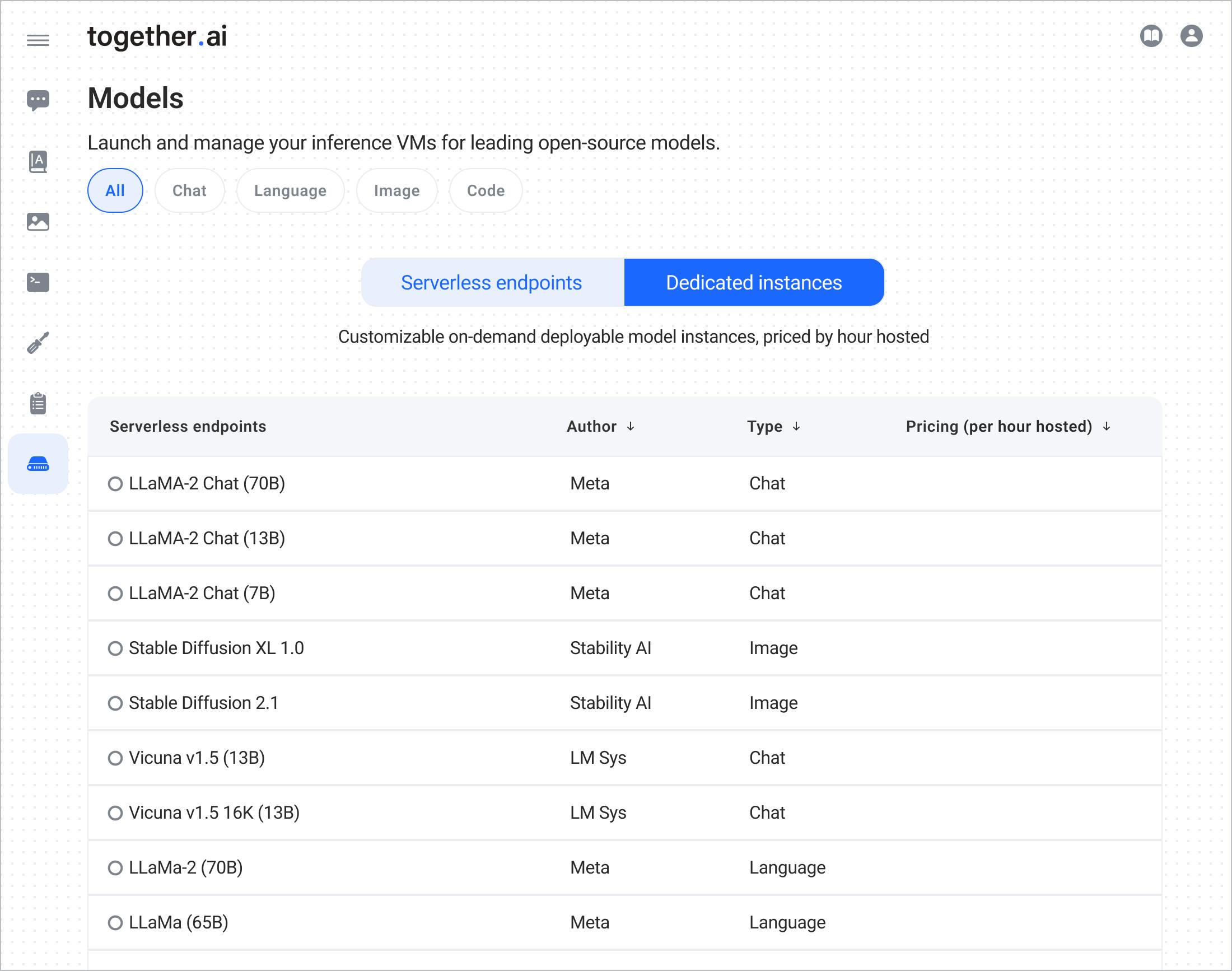

Together AI differentiated itself early as a GPU cloud platform by focusing on open source, giving its customers access to 100+ open models, from Mistral to Llama-2, so they can rapidly experiment with training different LLMs on their data.

Together AI's platform is designed to be an all-in-one solution for AI development, offering a suite of tools similar to Heroku but specifically tailored for AI workloads. This includes:

Access to GPU compute resources: Together AI provides access to high-performance GPU servers, sourced from a variety of providers including CoreWeave, Lambda Labs, and academic institutions. This allows developers to run compute-intensive AI workloads without having to invest in their own hardware.

Model hosting and serving: Together AI's platform makes it easy for developers to host their trained models and serve them via API endpoints, enabling seamless integration of AI capabilities into applications.

Fine-tuning and training tools: Together AI offers a range of tools and workflows for fine-tuning pre-trained models on custom datasets and training new models from scratch, allowing developers to adapt open-source models to their specific use cases.

Data management: The platform includes tools for managing the datasets used to train and fine-tune models, including data versioning, labeling, and preprocessing.

Experiment tracking and reproducibility: Together AI provides features for tracking and managing the various experiments and iterations involved in developing AI models, helping ensure reproducibility and facilitating collaboration.

Together AI's platform supports a wide range of popular open-source models, including Mistral, Llama-2, and its own RedPajama models.

Business Model

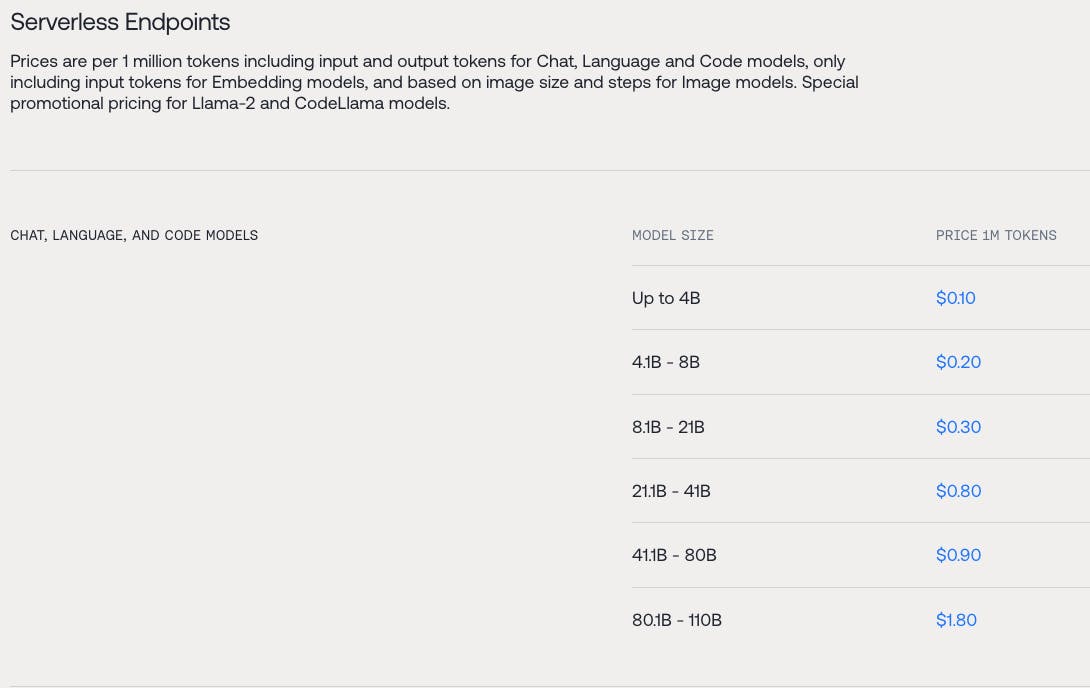

Together AI found product-market fit by charging per token, so that cost scales with API usage, and by acting as a developer experience-centric layer on top of CoreWeave and Lambda Labs' per-hour pricing.

While CoreWeave and Lambda Labs focus on locking in multi-year reservations to recoup the fixed capex costs of their data centers and GPUs, Together AI operates a layer above, aligning its pricing with the spiky API volumes of startups training new models and launching new products.

Together AI's token-based pricing is attractive to customers with variable or unpredictable workloads, especially early-stage startups and individual developers who are training new models and launching new products.

Token-based pricing allows them to align their costs more directly with the value they derive from their AI models, and mitigates the expensive risk of paying for idle GPU time that accompanies per-hour pricing.

While Together AI does incur costs in sourcing GPU compute from various providers, its value-add comes from bundling this compute with a comprehensive set of AI development tools and the convenience of a token-based pricing model. This allows Together AI to charge a (small) premium over the base cost of the GPU compute itself while still offering compute that is ~80% cheaper than the hyperscalers'. Sacra estimates Together AI's gross margin at ~45%.
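To make the idle-time argument concrete, the sketch below compares per-hour and usage-based billing on a cost-per-useful-GPU-hour basis. It is a simplified model, not Together AI's actual pricing. It assumes that the customer could otherwise rent the same GPU at the provider's underlying cost, that the ~45% gross margin applies uniformly as a markup on that cost, and that `utilization` (a hypothetical parameter) is the fraction of rented hours spent on useful work. The `BASE_COST` value is a placeholder, not a quoted price.

```python
GROSS_MARGIN = 0.45  # Sacra's gross margin estimate quoted in the report
BASE_COST = 2.00     # hypothetical underlying cost per GPU-hour, in USD


def per_hour_effective_cost(hourly_rate: float, utilization: float) -> float:
    """Cost per useful GPU-hour when idle hours are still billed."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_rate / utilization


def usage_based_effective_cost(base_cost: float, gross_margin: float) -> float:
    """Cost per useful GPU-hour when only work done is billed.

    Assumes price = base_cost / (1 - gross_margin).
    """
    return base_cost / (1 - gross_margin)


def breakeven_utilization(gross_margin: float) -> float:
    """Utilization below which usage-based billing is cheaper in this model.

    Setting base_cost / u equal to base_cost / (1 - margin) gives u = 1 - margin.
    """
    return 1 - gross_margin


usage = usage_based_effective_cost(BASE_COST, GROSS_MARGIN)
for utilization in (0.25, 0.55, 0.90):
    hourly = per_hour_effective_cost(BASE_COST, utilization)
    print(f"utilization {utilization:.0%}: per-hour ${hourly:.2f} vs usage-based ${usage:.2f}")

print(f"Break-even utilization: {breakeven_utilization(GROSS_MARGIN):.0%}")
```

Under these assumptions, usage-based billing is cheaper for any customer whose GPUs would sit idle more than about 45% of the time, which is consistent with the report's point about spiky startup workloads.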

Competition

The cloud GPU market is becoming increasingly crowded, with a range of players vying to provide the tools and resources developers need to build and deploy AI models.

Big Cloud

The three major cloud providers, Google Cloud ($75B in revenue in 2023), Amazon Web Services ($80B in revenue in 2023), and Microsoft Azure ($26B in revenue in 2023), are all investing in their own GPU clouds, as well as developer tooling for training and fine-tuning models.

While their focus has traditionally been on proprietary models and tools, they are beginning to embrace open-source AI as well. For example, AWS now offers Hugging Face's open-source models on its SageMaker platform.

GPU clouds

Companies like CoreWeave and Lambda Labs offer GPU compute resources specifically designed for AI workloads. While they don't provide the same level of software tooling as Together AI, they do offer raw compute power at competitive prices.

Lambda Labs is more directly competitive as it positions itself as a better option for smaller companies and developers working on less intensive computational tasks, offering Nvidia H100 PCIe GPUs at a price of roughly $2.49 per hour, compared to CoreWeave at $4.25 per hour.

On the other hand, Lambda Labs does not offer access to the more powerful HGX H100—$27.92 per hour for a group of 8 at CoreWeave—which is designed for maximum efficiency in large-scale AI workloads.
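The list prices quoted above can be put on a common per-GPU basis. The sketch below uses only the figures cited in this section. The variable and function names are hypothetical, and list prices change frequently, so the output is a snapshot rather than a current quote.

```python
LAMBDA_H100_PCIE = 2.49        # USD per GPU-hour, as quoted above
COREWEAVE_H100_PCIE = 4.25     # USD per GPU-hour, as quoted above
COREWEAVE_HGX_H100_X8 = 27.92  # USD per hour for a group of 8, as quoted above


def per_gpu_hourly(group_price: float, gpu_count: int) -> float:
    """Hourly price of one GPU within a multi-GPU group."""
    return group_price / gpu_count


def discount_vs(reference: float, price: float) -> float:
    """Fraction by which `price` undercuts `reference`."""
    return (reference - price) / reference


hgx_per_gpu = per_gpu_hourly(COREWEAVE_HGX_H100_X8, 8)
lambda_discount = discount_vs(COREWEAVE_H100_PCIE, LAMBDA_H100_PCIE)

print(f"CoreWeave HGX H100 per GPU: ${hgx_per_gpu:.2f}/hour")
print(f"Lambda Labs H100 PCIe vs CoreWeave H100 PCIe: {lambda_discount:.0%} cheaper")
```

On these figures, the HGX H100 group works out to about $3.49 per GPU-hour, and Lambda Labs' H100 PCIe price is roughly 41% below CoreWeave's.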

Inference services

Since 2023, a number of startups have been founded to serve AI models, particularly open-source LLMs, or have pivoted to doing so, either as their core business or as part of it. Besides Together AI, these include Anyscale, Deepinfra, Hugging Face, Perplexity, OpenRouter, Fireworks.ai, and others.

Research shows that Together AI, despite handling more traffic, demonstrates higher speed than other standalone inference services, with higher rate limits and better reliability. It prices its inference at roughly breakeven: lower than some other providers like Fireworks.ai, but higher than other providers that are operating at a loss.

TAM Expansion

Together AI's initial focus has been on providing infrastructure and tools for training and deploying open-source AI models. However, the company has several potential mechanisms to grow its total addressable market over time.

Higher-level services

As the AI market matures, there will likely be growing demand for higher-level, application-specific AI services. Together AI could leverage its platform to offer APIs for common AI tasks like text generation, image creation, and data analysis. This would allow it to capture more of the value chain and serve customers who don't want to build and maintain their own models.

Vertical expansion

While Together AI's platform is currently horizontal in nature, the company could develop specialized offerings for specific industries or use cases. For example, it could provide tools and models specifically tailored for healthcare, finance, or e-commerce applications.

Enterprise adoption

To date, Together AI has primarily served individual developers and small startups. However, it also offers services like GPU clusters designed for organizations handling more traffic.

As larger enterprises increasingly adopt AI, there will be a significant opportunity to provide enterprise-grade tools and services. This could include features like advanced security, compliance, and governance capabilities.

Commoditization of compute

Unlike pure-play GPU clouds like CoreWeave, Together AI is a beneficiary of the commoditization of compute: falling GPU prices lower Together AI's cost basis, while its per-token prices can expand as it focuses on winning on developer experience by delivering faster and more reliable inference across the widest variety of open-source models.

During the rise of mobile in the 2010s, the first beneficiaries were semiconductor companies like Qualcomm (NASDAQ: QCOM) and ARM (NASDAQ: ARM), but app-layer companies like Apple (NASDAQ: AAPL) and Google (NASDAQ: GOOG) went on to capture 10x as much value. With NVIDIA reporting it has already hit peak margins and LLMs quickly falling in price, value in AI will begin shifting towards app-layer wearables, search engines, and verticalized SaaS for the enterprise.

DISCLAIMERS

This report is for information purposes only and is not to be used or considered as an offer or the solicitation of an offer to sell or to buy or subscribe for securities or other financial instruments. Nothing in this report constitutes investment, legal, accounting or tax advice or a representation that any investment or strategy is suitable or appropriate to your individual circumstances or otherwise constitutes a personal trade recommendation to you.

This research report has been prepared solely by Sacra and should not be considered a product of any person or entity that makes such report available, if any.

Information and opinions presented in the sections of the report were obtained or derived from sources Sacra believes are reliable, but Sacra makes no representation as to their accuracy or completeness. Past performance should not be taken as an indication or guarantee of future performance, and no representation or warranty, express or implied, is made regarding future performance. Information, opinions and estimates contained in this report reflect a determination at its original date of publication by Sacra and are subject to change without notice.

Sacra accepts no liability for loss arising from the use of the material presented in this report, except that this exclusion of liability does not apply to the extent that liability arises under specific statutes or regulations applicable to Sacra. Sacra may have issued, and may in the future issue, other reports that are inconsistent with, and reach different conclusions from, the information presented in this report. Those reports reflect different assumptions, views and analytical methods of the analysts who prepared them and Sacra is under no obligation to ensure that such other reports are brought to the attention of any recipient of this report.

All rights reserved. All material presented in this report, unless specifically indicated otherwise is under copyright to Sacra. Sacra reserves any and all intellectual property rights in the report. All trademarks, service marks and logos used in this report are trademarks or service marks or registered trademarks or service marks of Sacra. Any modification, copying, displaying, distributing, transmitting, publishing, licensing, creating derivative works from, or selling any report is strictly prohibited. None of the material, nor its content, nor any copy of it, may be altered in any way, transmitted to, copied or distributed to any other party, without the prior express written permission of Sacra. Any unauthorized duplication, redistribution or disclosure of this report will result in prosecution.